Quick Peek at Active Implementation Framework: The Art and Science of Implementation

Editor's note: This brief is one in a series about the five key components of evidence-based policymaking as identified in "Evidence-Based Policymaking: A Guide for Effective Government," a 2014 report by the Pew-MacArthur Results First Initiative. The other components are program assessment, budget development, outcome monitoring, and targeted evaluation.

Overview

There is a growing consensus that rigorous evidence and data can and should be used, whenever possible, to inform critical public policy and budget decisions. In areas ranging from criminal justice to education, government leaders are increasingly interested in funding what works, while programs that lack evidence of their effectiveness are being carefully scrutinized when budgets are tightened. As the use of evidence-based interventions becomes more prevalent, there is an increasing recognition that it will be critical to ensure that these programs are effectively delivered. A large body of research now shows that well-designed programs poorly delivered are unlikely to achieve the outcomes policymakers and citizens expect.1

Government leaders can best ensure that they see the benefits of evidence-based programs by building capacity that supports effective implementation. This brief, one in a series on evidence-based policymaking published by the Pew-MacArthur Results First Initiative, identifies four key steps that state and local governments can take to strengthen this implementation effort:

- Require agencies to assess community needs and identify appropriate evidence-based interventions.

- Create policies and processes that support effective implementation and monitoring.

- Support service providers and staff through training and technical assistance.

- Create systems to monitor program implementation and improve performance.

Implementation: A missing piece of the evidence-based puzzle

Over the past two decades, a growing body of research has focused on the implementation of evidence-based programs. What happens when interventions that have been rigorously tested and found effective in the context of controlled studies are put into practice in real-world settings?2 This research has consistently shown that how these programs are delivered is critically important; those that fail to adhere to their intended design are less likely to achieve predicted outcomes.3 Summarizing the research findings from nearly 500 evaluations of prevention and health promotion programs for children and adolescents, one recent report estimated that interventions that were implemented correctly achieved effects that were two to three times greater than programs where significant problems with implementation were found.4

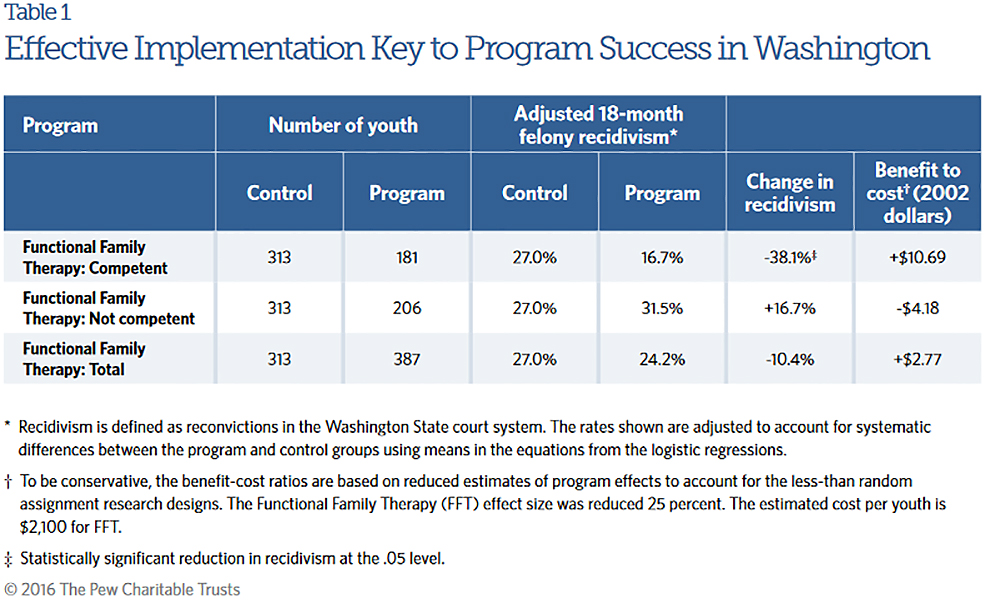

The state of Washington encountered this dichotomy after investing in four evidence-based interventions focused on reducing recidivism among youth in the juvenile justice system. The state initially funded the programs after an analysis by the Washington State Institute for Public Policy (WSIPP) predicted that they would be highly cost-effective in treating juvenile offenders. After the programs had been in place for several years, the Legislature directed WSIPP to evaluate them to determine if they were achieving the predicted outcomes. The evaluation found that the programs were effectively reducing recidivism in locations where providers followed treatment protocols. In contrast, recidivism had actually increased in locations where providers were failing to adhere to the program models.

For example, the evaluation found that one of the programs, Functional Family Therapy, had reduced recidivism by 38.1 percent and generated benefits of $10.69 in reduced crime costs for each dollar spent on competently implemented treatment. Where treatment protocols were not followed, recidivism increased by 16.7 percent, costing taxpayers $4.18 for each dollar spent.5 Rather than cutting the programs, the Legislature decided to improve implementation and mandated that agencies develop standards and guidelines to ensure that juvenile justice programs were delivered effectively.6

Why governments struggle with implementation

Governments encounter difficulties for several reasons when overseeing the implementation of evidence-based programs. First, many interventions (especially those that are evidence-based) are complex and involve multiple entities, including government agencies, service providers, and program developers, all of whom must cooperate in service delivery. Also, even the most widely used evidence-based programs are intended to serve only specified populations, at recommended treatment levels, and in supportive environments. Successful implementation cannot be taken for granted and requires significant planning, management support, and leadership at both the system and provider levels.

Second, it can be difficult to deliver services in real-world settings. For example, some evidence-based programs may specify that services are to be conducted only by certified nurses or therapists, yet personnel with these qualifications may be difficult to hire in areas where clients live. Often it can be unclear which aspects of an evidence-based program can be modified to meet the needs of particular communities and populations while still producing predicted results. Evidence-based programs often provide only limited guidance on these questions, leaving program managers to balance fidelity7 to program design with the practical challenges they face in their communities.8 Understanding what adaptations can be made, and when such changes may affect outcomes, can make the difference between a successful program and one that is ineffective or even harmful.9

Finally, securing policymaker support for investments in program implementation can be challenging. Funding for staffing, training, technical assistance, and monitoring and reporting systems is frequently among the first items cut under budget reductions in order to preserve direct services to clients, if these measures are funded at all. However, without these investments in capacity, governments risk much greater spending on programs that may fail to achieve intended outcomes if ineffectively delivered.

How government can support effective program implementation

Governments play several critical roles in program implementation. These include establishing procedures for how programs are selected, creating a management infrastructure that enables effective implementation, supporting program providers through training and technical assistance, and developing systems that track implementation and outcomes and support ongoing quality improvement. Fortunately, government agencies do not need to carry out these tasks on their own but can use the expertise of partners, including universities, provider organizations, program developers, and technical assistance intermediaries.

Key steps for supporting effective program implementation

State and local governments can take four key steps to strengthen implementation of evidence-based programs.

Step 1: Require agencies to assess community needs and identify appropriate evidence-based interventions.

Before a program is implemented, governments should ensure that the intervention is a good fit for the problem being addressed. They should carefully assess the community needs and identify evidence-based programs that have been shown to be effective in achieving the desired outcomes in similar contexts.

Conduct needs assessments to understand problems and service gaps. It is important for key stakeholders to develop a shared understanding of the specific issues facing communities, such as gaps in currently available services.10 The choice of which programs to implement should be based on a clear vision of the desired outcomes and the underlying causes of the problems, which can vary from one community to the next. To achieve this understanding, governments should conduct a formal needs assessment that gathers data about target populations, the prevalence of key problems, and the risk factors that could be addressed through interventions.

Governments can use one of several national models when conducting these assessments. For example, the Communities That Care (CTC) model, endorsed by the Substance Abuse and Mental Health Services Administration (SAMHSA), provides a framework for identifying youth needs using a school-based survey that collects data on key risk and protective factors among youth in grades 6 through 12. The survey data are then used to pinpoint problem areas that could be addressed by evidence-based programs.11

Pennsylvania adopted the CTC model in the early 1990s and created more than 100 prevention coalitions across the state to identify and prioritize community needs. The coalitions used the CTC data-driven approach to build an understanding of local problems and key risk factors that could be addressed through evidence-based interventions, which informed their strategies for addressing these needs and led to the adoption of over 300 evidence-based program replications across the state.12

Select evidence-based approaches that address identified needs. Once community needs are understood, the next step is to assess and select programs that have been shown to be effective in addressing these issues. Key resources for this assessment are the national research clearinghouses, such as the National Registry of Evidence-Based Programs and Practices operated by SAMHSA, that compile lists of evidence-based programs. These organizations conduct systematic literature reviews, frequently examining hundreds or thousands of studies, to identify interventions that rigorous evaluations have shown to be effective in achieving outcomes such as higher graduation rates and reduced criminal reoffending. Each clearinghouse typically addresses one or two policy areas, such as criminal and juvenile justice, child welfare, mental health, education, and substance abuse.13

Some policymakers mandate that interventions be selected from those listed by the clearinghouses. For example, the Utah Division of Substance Abuse and Mental Health requires that its funding be used to implement evidence-based programs listed by designated national clearinghouses, including Blueprints for Healthy Youth Development, the Office of Juvenile Justice and Delinquency Prevention Model Programs Guide, and the Communities That Care Prevention Strategies Guide.14

Step 2: Create policies and processes that support effective implementation and monitoring.

To scale up evidence-based programs, governments must develop the management infrastructure needed to facilitate effective program implementation. This includes creating standards or guidelines, embedding these standards into contracts, and aligning them with administrative policies and processes to support effective implementation.

Develop implementation standards. Policymakers can establish common standards or guidelines for program implementation to ensure that providers meet a minimum level of competency in delivering services. Although some requirements will vary depending on the specific evidence-based intervention, other aspects of program implementation are universal and can be embedded into these standards. These may include minimum requirements for hiring and training staff, providing services to the target population specified by the evidence-based program provider, and ensuring that processes are in place to provide effective oversight of service delivery.

For example, leaders in Washington state developed standards to implement evidence-based juvenile justice programs after an evaluation found that sites where programs were not implemented with fidelity had poor results. These standards govern four key elements of quality assurance (program oversight, provider development and evaluation, corrective action, and ongoing outcome evaluation) and include protocols for hiring, staff training and assessment, and management and oversight of service delivery. Providers are required to complete an initial probationary period during which they receive training and feedback, and are then periodically evaluated. These implementation standards helped the state achieve greater reductions in crime and juvenile arrest rates, compared with the national average, and a decrease of more than 50 percent in the number of youth held in state institutions.15

Embed implementation standards or requirements into contracts. Agencies can build these standards into contracts to ensure that providers meet the required baseline levels of proficiency. In 2013, for example, New York state's Division of Criminal Justice Services (DCJS) issued a request for proposal for alternatives to incarceration that required providers to identify the specific evidence-based interventions they planned to implement, provide detail on their screening and referral systems, and describe how they would adhere to the programs' treatment protocols. The division now monitors providers' fidelity to these requirements as part of a comprehensive process through which providers submit case-specific data to DCJS and undergo on-site reviews by third-party monitors contracted by the state. The reviews assess the degree to which programs are implementing principles of effective correctional interventions.16

Some agencies have embedded requirements related to implementation fidelity in their provider guidelines, which often cover a broad range of contracted services. In 2014, New York's Office of Alcoholism and Substance Abuse Services updated its provider guidelines for prevention services, defining the strategies and activities necessary to reduce underage drinking, alcohol misuse and abuse, illegal drug abuse, medication misuse, and problem gambling.17 The guidelines require providers to implement programs with fidelity to the "core elements" of evidence-based services, including the target population, setting, and curriculum content.

Align administrative policies and processes to support effective implementation. Implementing evidence-based programs often requires changes throughout service delivery networks. Existing administrative processes should be aligned with these delivery efforts. Otherwise, agencies and providers can face conflicting mandates or inflexible payment systems that make it difficult to effectively deliver critical services. Policymakers and agency leaders can help by creating feedback loops that enable administrators, providers, and technical assistance staff to regularly share information and solve unanticipated problems.18

For example, the Colorado Department of Corrections recently adopted a new integrated case management system to improve its planning and offender treatment services. In doing so, the department found that certain policies were not in alignment with the research on what works regarding low-risk offenders. Specifically, the research indicated that less contact with low-risk offenders leads to better outcomes. The department resolved the issue by facilitating changes to administrative regulation standards regarding the frequency of contact to better align policies and practices with the literature.

Step 3: Support service providers and staff through training and technical assistance.

Training and technical assistance are critical to implementing new interventions and practices. Program staff need to be trained on specific treatment protocols. Research shows that such training is most effective when delivered in multiple stages, including initial learning sessions followed by observation and feedback by experts, with subsequent ongoing in-service training and coaching once the program is up and running.19 Policymakers can support this process by funding and establishing systems that train staff on the delivery of evidence-based programs and practices; agency leaders can choose between several options for the delivery of this training.

It is particularly important for program administrators to ensure that staff are appropriately trained to use screening and assessment tools designed to help match participants with the appropriate interventions. Even the most widely replicated evidence-based programs are effective only when treating certain populations. Without the appropriate screening and assessment tools, agencies may refer participants to programs they do not need and that are not effective in addressing their problems. "We frequently hear frustration from agencies who tried evidence-based programs but still didn't achieve the outcomes they sought because the programs weren't delivered to the right population," said Ilene Berman, senior associate with the Annie E. Casey Foundation's Evidence-Based Practice Group.20

Determine the best vehicle for delivering training and technical assistance. Governments have several options for delivering training on evidence-based programs, such as using in-house personnel with expertise in these programs, contracting with program developers, or partnering with intermediary organizations. Some widely adopted programs, such as Multisystemic Therapy and Nurse-Family Partnership, offer training services to governments that implement them. Such program developers have deep expertise in their interventions and often have detailed training curricula. However, relying exclusively on program developers can limit an organization's ability to develop its own expertise and may complicate training if agencies are implementing multiple evidence-based programs. Other options include leveraging the expertise of local researchers through a government-university partnership, or developing an evidence-based unit within a government agency.

Partner with a research university

Several states, including Maryland, Pennsylvania, and Washington, have established partnerships with research universities to provide training and technical assistance to staff and providers. These implementation centers can help support community readiness assessments, provide training and technical assistance on evidence-based programs, and oversee monitoring and quality improvement efforts.21 For example, the Institute for Innovation and Implementation at the University of Maryland was established in 2005 and is funded to provide training, implementation support, and evaluation services for select evidence-based programs across multiple policy areas, including juvenile justice and child welfare. The institute also provides technical assistance and project management support to state agencies engaged in statewide initiatives. "It's important to have multiple state and local agencies on board as well as the provider community. … Collaboration across agencies is important in order to coordinate existing efforts, develop new strategies, and make sure that everybody is getting the same information," said Jennifer Mettrick, director of implementation services at the institute.22

In Pennsylvania, the Evidence-based Prevention and Intervention Support Center, or EPISCenter, provides technical assistance to communities and service providers to support the implementation of evidence-based prevention and intervention programs. Since 2008, the center (a partnership between the state Commission on Crime and Delinquency and Pennsylvania State University, with funding from the commission and from the state Department of Human Services) has assisted in the establishment of nearly 300 evidence-based program replications in more than 120 communities throughout the state. Experts from the center provide technical assistance to local staff on implementation, evaluation, and sustainability, and help develop the infrastructure to monitor the program for fidelity to its original design.

Develop an evidence-based unit or division

Another option used by some states and localities is to establish specialized units within agencies that are charged with providing training as well as overseeing program implementation. These partnerships and units can help governments develop in-house expertise for a range of programs.

For example, Colorado's Evidence-Based Practices Implementation for Capacity (EPIC) Resource Center is a collaborative effort of five agencies working in the state's adult and juvenile justice systems. The center was created by the Colorado Commission on Criminal and Juvenile Justice in 2009 and formalized through legislation in 2013.23 Housed in the Division of Criminal Justice within the Department of Public Safety, the nine-person staff provides assistance to support effective implementation of evidence-based practices.


The center is helping to build the capacity of organizations and to support effective program implementation. "We initially talked to implementation experts when first designing the program, and they told us that you can't just train people on evidence-based programs to get a practice integrated; you really need to go deeper," said Diane Pasini-Hill, the center's director. As a result, "we've transitioned into being more of a full implementation center as opposed to only coaching and training alone. Through working primarily with line staff and supervisors, we found that there were too many gaps to make this [training] effective on its own. We live by the motto that if you just do an evidence-based program and don't pay attention to implementation strategies, you're not going to get the results you want."24

The Role of Implementation Teams

Regardless of which option is selected, governments should clarify the role of each partner involved in implementing a new program or initiative, including program administrators, service providers, and intermediaries, to help minimize challenges. One common strategy that has proved effective in scaling up evidence-based programs, especially in K-12 education, is the use of implementation teams, which typically include partners both inside and outside government. These teams play an important role throughout the process, helping to build buy-in for the initiative, create an infrastructure to support implementation, monitor program fidelity, assess outcomes, and solve problems by bridging the divide between policymakers and practitioners.25

"We act as a neutral facilitator," said Matthew Billings, project manager with the Providence Children and Youth Cabinet, who leads implementation teams to scale up three evidence-based programs in the Rhode Island capital. "At the community level, there is often a lot of confusion about what evidence-based programs are and what aspects of the program can be tailored to meet the needs of the population we're serving. We work with providers to gather their feedback on what's working and what's not. And then we can take that information to program developers and ask them ... can these changes be made? Sometimes adaptations can be made and sometimes they can't. But it's very powerful for providers when they see that their feedback is being taken seriously."26

Step 4: Create systems to monitor program implementation and improve performance.

The final key step for governments seeking to successfully implement evidence-based programs is to fund and establish systems that regularly monitor providers to make sure they are delivering interventions with fidelity. This monitoring can then create feedback loops that use data to track outcomes and continuously improve performance.

Regularly monitor programs to ensure fidelity. As discussed, research has shown that evidence-based programs in many policy areas, including substance abuse prevention, education, criminal justice, and mental health, must be appropriately implemented in order to achieve their desired outcomes.27 Program managers have several tools for monitoring program fidelity. For example, they can use fidelity checklists and recorded observations to assess the extent to which providers adhere to key elements of evidence-based practices.

Recently, tools have been developed that aim to streamline monitoring efforts by allowing agencies to assess fidelity across multiple programs. In Washington state, the Evidence-Based Practice Institute (EBPI) is developing a standardized process to monitor program implementation and fidelity across four extensively used evidence-based child welfare programs. The institute was established in 2008 to help scale up evidence-based practices available to children and youth served by the state's mental health, juvenile justice, and child welfare systems.

Its monitoring tools were developed in partnership with the Children's Administration, a division of the state's Department of Social and Health Services.

"We had an observation that there are a handful of evidence-based programs [being widely used in the state] with fidelity, training, and supervision," said Eric Bruns, co-director of EBPI. "We ended up focusing on four programs and looking at the different requirements across them and trying to figure out how we can have some uniformity [in implementation], given there were specific program differences."28 The institute is evaluating the standardized process to determine whether it can be expanded to measure program fidelity across additional programs.

Use monitoring tools to identify and address gaps in organizational capacity. Programs often fail to achieve expected results because the organizations delivering the services lack the capacity to perform critical tasks.29 For example, many evidence-based programs have strict treatment protocols, which include staff qualifications (e.g., the Nurse-Family Partnership program specifies that registered nurses deliver services), service levels and duration, and staff-to-client ratios. Leadership commitment to delivering these programs with fidelity is also important, as are well-functioning administrative processes such as training, monitoring, and data collection protocols.30

Agencies should ensure that providers have the demonstrated ability to meet the requirements, and can use assessment tools to identify gaps in organizational capacity and target training and assistance to address these needs.31 For example, many state and local governments use rating tools that assess both service quality and the capacity of organizations to effectively deliver early childhood education services. In some cases, organizations that receive higher scores are eligible to receive higher rates, based on the assumption that they will be more likely to achieve good outcomes for the children they serve. Similarly, tools such as the Correctional Program Checklist are available to assess providers' readiness to deliver criminal justice programs and assess both organizational capacity and service quality, considering factors such as leadership, staff qualifications, and quality assurance systems.32


New York state's Division of Criminal Justice Services (DCJS) is using the Correctional Program Checklist to assess the extent to which service providers are adhering to key principles of evidence-based practice in their corrections and community supervision programs. Designed by researchers at the University of Cincinnati, the tool assesses both an organization's capacity to deliver effective services, including its leadership and quality of staff, and the content knowledge of staff and management on evidence-based practices. The assessment uses data collected through formal interviews, observation, and document review to help identify strengths as well as areas for improvement. DCJS is using the tool to discover areas where providers may need to make changes or develop additional capacity. The division then provides technical assistance to support them.33

"Our new approach is totally changing what we fund and what we know about programs," said Terry Salo, deputy commissioner of DCJS. "There is not a week that goes by where something doesn't surface [through monitoring] where we learn about the program and [are able to use that data to] engage in course corrections. We can't just tell programs what they're doing wrong without having resources to help them."34

Create a feedback loop that supports program improvement. A critical component of effective implementation is a strong feedback loop in which service providers, government agency staff, and program developers regularly share implementation data, identify areas for improvement, and act on information to improve service delivery.35 These feedback loops work in two directions: program providers collect data to measure implementation progress and then share the information with agency managers and policymakers, who in turn use the data to make needed adjustments in policies and administrative practices to better support organizations involved in service delivery. Studies have shown that efforts to scale up and sustain evidence-based programs have been largely successful when these practice-to-policy links are well established, while the opposite is true when these links are weak or nonexistent.36

These feedback loops can be supported by intermediary organizations. For example, in Pennsylvania, the EPISCenter serves as a liaison for the providers delivering evidence-based services, the agencies charged with overseeing these services, and researchers and program developers who identify key implementation requirements. The center's roles include interpreting data on effectiveness for agencies and providers; helping providers identify and collect outcome and implementation data, and report them to oversight agencies; and working with the agencies to help align their policies to resolve issues and facilitate successful program implementation.

"The focus of implementation monitoring needs to be on quality improvement rather than just contract compliance. … Otherwise, the organizations delivering the programs won't want to share data or be open about any of the problems they're experiencing," said Brian Bumbarger, founding director of the EPISCenter. "If [implementation monitoring] is all driven by the organization doing the contracting [e.g., state or local government], there are incentives for providers to try to minimize or downplay implementation challenges, or just give the funding agency the compliance data they want without really thinking about how it's helping them improve their services. It has to be a partnership rather than a one-sided transactional relationship."37

Use monitoring data to adapt interventions to fit local conditions. The need to adapt programs to real-world settings while maintaining program fidelity continues to be a persistent challenge to scaling up evidence-based interventions. Though a large body of research underscores the importance of program fidelity to achieving intended outcomes, the research also shows that, in order to be sustainable, evidence-based programs may need some adaptations to accommodate issues that arise during implementation, including cultural norms and limitations on the availability of staff time and resources.38 Administrators can work with model program developers to identify which components of an evidence-based program can be modified while still maintaining fidelity, and then provide guidance to service providers and agencies on this issue. When considering which interventions to implement, policymakers should also carefully consider whether the program would be a good fit within certain settings.

In 2009, the Oregon Legislature passed a bill to use the nationally recognized Wraparound system of care for emotionally disturbed and mentally ill children, with statewide programs in place by 2015. A key part of Oregon Wraparound is fidelity monitoring, overseen by the Oregon Health Authority. The National Wraparound Initiative has provided assessment tools to ensure that programs remain faithful to its 10 basic principles. However, administrators may adapt other, noncritical aspects of the program to fit local conditions and needs, which can vary across the state. "The goal is to meet communities where they are so that this is sustainable. Whatever you're building needs to be part of the community you're working with. You [need to] maintain the fidelity of the model but also make sure that it's tailored to the community," said William Baney, former director of the Systems of Care Institute at Portland State University's Center for Improvement of Child and Family Services, which provides training and systems support to Oregon Wraparound.39

Conclusion

To fully realize the benefits of evidence-based programs, governments must invest in the capacity of systems and provider organizations to implement the programs effectively. Policymakers can support these efforts by providing leadership and, when necessary, redirecting resources to support the training, technical assistance, supervision, and oversight necessary to ensure that programs are delivered effectively and with fidelity to their research design.

This brief is one in a series about the five key components of evidence-based policymaking, as identified in Evidence-Based Policymaking: A Guide for Effective Government. The other components are program assessment, budget development, outcome monitoring, and targeted evaluation.

Endnotes

- Dean L. Fixsen et al., Implementation Research: A Synthesis of the Literature (Tampa: University of South Florida, Louis de la Parte Florida Mental Health Institute, 2005). http://ctndisseminationlibrary.org/PDF/nirnmonograph.pdf.

- Ibid.

- Ibid.

- Joseph A. Durlak and Emily P. DuPre, "Implementation Matters: A Review of Research on the Influence of Implementation on Program Outcomes and the Factors Affecting Implementation," American Journal of Community Psychology 41 (2008): 327–50.

- Pew Center on the States and MacArthur Foundation, "Better Programs, Better Results" (2012), http://www.pewtrusts.org/~/media/assets/2012/07/26/pew_results_first_case_study.pdf.

- Ibid.

- Carol T. Mowbray et al., "Fidelity Criteria: Development, Measurement, and Validation," American Journal of Evaluation 24, no. 3 (2003): 315–40. "Fidelity" refers to the extent to which delivery of an intervention adheres to the protocol or program model originally developed.

- Dean L. Fixsen et al., "Statewide Implementation of Evidence-Based Programs," Exceptional Children 79, no. 2 (2013): 213–30.

- Julia E. Moore, Brian K. Bumbarger, and Brittany Rhoades Cooper, "Examining Adaptations of Evidence-Based Programs in Natural Contexts," Journal of Primary Prevention 34 (2013): 147–61.

- Fixsen et al., Implementation Research; Allison Metz and Leah Bartley, "Active Implementation Frameworks for Program Success: How to Use Implementation Science to Improve Outcomes for Children," Zero to Three 32, no. 4 (2012): 11–18. http://www.iod.unh.edu/APEX Trainings/Tier 2 Manual/Additional Reading/4. Implementation%20article Metz.pdf.

- Communities That Care is an evidence-based model for delivering prevention services that was developed by J. David Hawkins and Richard F. Catalano. For more information, visit http://www.communitiesthatcare.net.

- Brian K. Bumbarger, director, EPISCenter, email message, Nov. 25, 2015.

- There are several widely recognized national research clearinghouses, including the U.S. Department of Education's What Works Clearinghouse, the U.S. Department of Justice's CrimeSolutions.gov, Blueprints for Healthy Youth Development, the Substance Abuse and Mental Health Services Administration's National Registry of Evidence-Based Programs and Practices, the California Evidence-Based Clearinghouse for Child Welfare, What Works in Reentry Clearinghouse, and the Coalition for Evidence-Based Policy.

- Utah Division of Substance Abuse and Mental Health, Division Directives—Fiscal Year 2014 (March 2013), http://dsamh.utah.gov/pdf/contracts_and_monitoring/Divison Directives_FY2014 Final.pdf.

- Pew Center on the States and MacArthur Foundation, "Better Programs, Better Results."

- Leigh Bates, principal program research specialist, New York State Division of Criminal Justice Services, interviewed July 13, 2015.

- New York State Office of Alcoholism and Substance Abuse Services, Addiction Services for Prevention, Treatment, Recovery, 2014 Prevention Guidelines for OASAS Funded and/or Certified Prevention Services, http://www.oasas.ny.gov/prevention/documents/2014PreventionGuidelines.pdf.

- Lauren H. Supplee and Allison Metz, "Opportunities and Challenges in Evidence-Based Social Policy," Sharing Child and Youth Development Knowledge Social Policy Report 28, no. 4 (2015). http://www.srcd.org/sites/default/files/documents/spr_28_4.pdf.

- Justice Research and Statistics Association, Implementing Evidence-Based Practices (2014), http://www.jrsa.org/projects/ebp_briefing_paper2.pdf.

- Ilene Berman, senior associate, Annie E. Casey Foundation's Evidence-Based Practice Group, email message, Jan. 13, 2016.

- Jennifer Mettrick et al., "Building Cross-System Implementation Centers: A Roadmap for State and Local Child- and Family-Serving Agencies in Developing Centers of Excellence (COE)" (2015), Institute for Innovation and Implementation, University of Maryland, https://theinstitute.umaryland.edu/newsletter/articles/bcsic.pdf.

- Jennifer Mettrick, director of implementation services, Institute for Innovation and Implementation at the University of Maryland, interviewed April 14, 2015.

- See Colorado House Bill 13-1129, https://cdpsdocs.state.co.us/epic/EpicWebsite/HomePage/HB13-1129.pdf.

- Diane Pasini-Hill, manager, Evidence-Based Practices Implementation for Capacity (EPIC) Resource Center, interviewed Aug. 17, 2015.

- Metz and Bartley, "Active Implementation."

- Matthew Billings, project manager, Providence Children and Youth Cabinet, interviewed Dec. 4, 2015.

- Linda Dusenbury et al., "A Review of Research on Fidelity of Implementation: Implications for Drug Abuse Prevention in School Settings," Health Education Research 18, no. 2 (2003); Fixsen et al., "Statewide Implementation."

- Eric Bruns, co-director, Evidence Based Practice Institute (EBPI), University of Washington, interviewed June 23, 2015.

- Supplee and Metz, "Opportunities and Challenges"; Michael Hurlburt et al., "Interagency Collaborative Team Model for Capacity Building to Scale Up Evidence-Based Practice," Children and Youth Services Review 39 (2014): 160–68.

- Deborah Daro, Replicating Evidence-Based Home Visiting Models: A Framework for Assessing Fidelity (2010). http://www.mathematica-mpr.com/~/media/publications/PDFs/earlychildhood/EBHV_brief3.pdf.

- Ibid.

- University of Cincinnati, "Summary of the Evidence-Based Correctional Program Checklist" (2008), https://www.uc.edu/content/dam/uc/corrections/docs/Training Overviews/CPC Assessment Description.pdf.

- Terry Salo, deputy commissioner, New York State Division of Criminal Justice Services, interviewed July 13, 2015.

- Ibid.

- Supplee and Metz, "Opportunities and Challenges."

- Fixsen et al., "Statewide Implementation."

- Brian K. Bumbarger, director, EPISCenter, interviewed March 19, 2015.

- Dusenbury et al., "A Review"; Fixsen et al., "Statewide Implementation."

- William Baney, former director, Systems of Care Institute, Portland State University, interviewed March 17, 2014.
